The Revenue Attribution Problem: Why Your Popup Software Data May Be Giving You Inflated Numbers


A few weeks ago, we had a conversation with the marketing team from a Shopify store doing nearly $3 million in annual revenue.

Their head of marketing pulled up their analytics and showed some unbelievable numbers: when they added up the "attributed revenue" from Google ads, social media ads, their email marketing platform, and their popups, they were claiming credit for over $10 million in sales.

“If we added up the revenue claimed by all platforms we're using, it would look like we made about $15 million,” he said. “We must be the most successful marketers in history!”

He was right to be skeptical.

Many marketing platforms are designed to prove their own revenue impact to users, which often means they don't give you a single source of truth.

And if popup software is your only source of revenue attribution data, you might be seeing the same inflated numbers that challenge marketers across the entire industry.

In this post, I'll explain common issues in popup revenue attribution and share a few methods to measure (and improve) the real impact of this channel on ecommerce sales.

The multi-platform attribution crisis

Of course, every marketing platform out there is incentivized to inflate its contribution to its users' revenue.

Google gets paid more when its ads appear successful. Some email marketing platforms win contract renewals on the strength of attributed revenue. Advertisers spend more on Facebook when its reported ROAS looks good.

Remember the infamous lawsuit against Facebook a few years ago? The one alleging that the company knowingly inflated ad metrics to boost its own revenue?

So... What about popup tools?

Well, it’s pretty much the same story.

The result is what we're seeing across the industry: an almost complete breakdown of meaningful attribution measurement. Your popup campaigns are just one piece of a much larger problem that's getting worse as iOS privacy changes and cookie restrictions make tracking more challenging.

But since website popups are our specialty, let's focus on what this means for your popup strategy specifically.

Here's what's actually happening in your customer's journey to purchase:

Stage 1:

Sarah discovers your brand through a Facebook ad

Stage 2:

She visits your Shopify store, sees a welcome popup, decides to sign up, and gets a 10% discount. But she doesn't buy anything and leaves.

Stage 3:

Three days later, she gets a marketing email about a limited-time sale you’re having. She clicks through and browses, but leaves again without buying anything. By this point, the discount code she got from the welcome popup has expired.

Stage 4:

Two days after that, she searches for your brand on Google and visits your store again. She browses a few pages, hesitates, and is about to leave when she sees an exit-intent offer with another time-limited discount code. She finally makes a purchase.

So who gets credit for that sale?

If you're like most businesses, everyone does.

Facebook claims it because of the initial touchpoint, which is true. Google attributes it to the brand search, which is also a valid argument. Your email platform counts it as an email conversion because of that email informing Sarah about the sale. And your popup tool shows it as "attributed revenue" because she interacted with the exit-intent popup.

One sale. Four platforms claiming full credit. The numbers don't quite add up, but the marketing budgets feel the impact.
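To make the double-counting concrete, here's a minimal Python sketch of Sarah's journey. It assumes a $100 order, places the four stages on hypothetical day numbers, and gives every platform the same simple rule: claim the whole order if you touched the buyer inside your own 30-day window. The names (`Touchpoint`, `self_reported_credit`) are illustrative only, not any platform's real API.

```python
from dataclasses import dataclass


@dataclass
class Touchpoint:
    platform: str
    day: int  # days since Sarah's first visit (hypothetical)


# Sarah's journey from the stages above; day numbers are assumptions
journey = [
    Touchpoint("facebook_ads", 0),  # Stage 1: discovers the brand
    Touchpoint("popup", 0),         # Stage 2: welcome popup signup
    Touchpoint("email", 3),         # Stage 3: sale email
    Touchpoint("google_ads", 5),    # Stage 4: brand search
    Touchpoint("popup", 5),         # Stage 4: exit-intent popup
]
ORDER_VALUE = 100.0  # hypothetical order value
PURCHASE_DAY = 5


def self_reported_credit(journey, purchase_day, order_value, window_days=30):
    """Each platform claims the FULL order if it touched the buyer within its window."""
    claims = {}
    for tp in journey:
        if 0 <= purchase_day - tp.day <= window_days:
            claims[tp.platform] = order_value  # no sharing: full credit per platform
    return claims


claims = self_reported_credit(journey, PURCHASE_DAY, ORDER_VALUE)
total_claimed = sum(claims.values())
print(claims)
print(f"Actual revenue: {ORDER_VALUE:.2f}, total claimed: {total_claimed:.2f}")
```

Four platforms each report the full order, so the dashboards add up to four times the revenue that actually came in.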

The false confidence trap

The problem with popup revenue attribution goes deeper than simple double-counting and over-reporting. Most popup platforms are designed to make you feel good about your investment, not to give you accurate business intelligence.

Let me give you an example.

Brand X had a cart recovery popup campaign that showed $20,000 in attributed revenue over three months. And none of those months were during the holiday season or other peak sales periods.

That’s an unbelievable performance, right?

But when we dug deeper into their popup tool’s analytics dashboard, we found something strange: the attribution window was set to 180 days.

Think about that for a moment.

If someone got a discount from a popup in, say, May and made a purchase in June after seeing multiple retargeting ads, receiving a dozen emails, and possibly even visiting the brand’s brick-and-mortar store, should that popup really get credit for the conversion?

The answer is obvious here, but the default settings in many ecommerce popup tools aren't.

Many platforms set attribution windows at 7, 30, or even 180 days by default, creating the illusion of impact long after your popup's actual influence has faded. They do this because longer windows mean higher attributed revenue numbers, which make users feel better about their investment.

Here's what we've learned from working with hundreds of ecommerce brands:

Popup campaigns are immediate conversion tools.

They work in the moment or within the first 24-48 hours. If someone converts a week after seeing your popup, something else likely drove that purchase, like your email nurture workflow, retargeting ads, or Google search.
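Here's a rough sketch of how the attribution window alone changes the number you see. The visitor IDs, timestamps, and order values are made up, and `attributed_revenue` is a hypothetical helper, not a feature of any specific popup tool. The orders never change between runs; only the window does.

```python
from datetime import datetime, timedelta

# Hypothetical data: when each visitor last interacted with a popup...
popup_interactions = {
    "v1": datetime(2024, 5, 1, 10),
    "v2": datetime(2024, 5, 1, 12),
    "v3": datetime(2024, 5, 2, 9),
    "v4": datetime(2024, 5, 3, 15),
}
# ...and when (and for how much) they later ordered
orders = [
    ("v1", datetime(2024, 5, 1, 10, 20), 80.0),  # same session
    ("v2", datetime(2024, 5, 3, 8), 120.0),      # under 2 days later
    ("v3", datetime(2024, 5, 12, 9), 95.0),      # 10 days later
    ("v4", datetime(2024, 6, 20, 15), 150.0),    # about seven weeks later
]


def attributed_revenue(orders, interactions, window):
    """Sum order revenue placed within `window` after the visitor's popup interaction."""
    total = 0.0
    for visitor, ordered_at, amount in orders:
        seen_at = interactions.get(visitor)
        if seen_at is not None and timedelta(0) <= ordered_at - seen_at <= window:
            total += amount
    return total


for days in (1, 3, 7, 30, 180):
    revenue = attributed_revenue(orders, popup_interactions, timedelta(days=days))
    print(f"{days:>3}-day window: ${revenue:.2f} attributed")
```

With a 1-day window only the same-session order counts; stretch it to 180 days and even the order placed about seven weeks later is credited to the popup.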

Why attribution windows matter more than you think

Let me begin with another example.

Brand B, a premium home goods brand, was struggling with this exact issue: their popups showed impressive attributed revenue with a 7-day attribution window, but their head of ecommerce suspected that many customers would have bought anyway, which meant those performance numbers were overstated.

Just because someone clicked a popup and later made a purchase doesn't necessarily mean the popup led to the purchase. They might have been ready to buy regardless.

They might have been influenced by your emails, retargeting ads, or something else. A popup might have just been the last thing they touched before converting.

For popup campaigns specifically, we recommend attribution windows of 3-5 days maximum for most ecommerce stores.

Why?

Because, again, popups are designed for immediate action. They capture visitors in the moment of engagement on your website. If your popup isn't driving action within a few days, it's probably not an important factor in their decision to buy.

One luxury fashion brand discovered this the hard way.

They had a discount popup for new visitors with a 14-day attribution window that appeared to generate 12% of their monthly revenue. When they shortened the window to just three days, the attributed revenue dropped to around 5%. That was a more realistic reflection of the popup's actual impact.

But even that 5% might still be inflated a bit, because attribution windows still don't solve the core problem: they can't tell you if sales would have happened anyway.

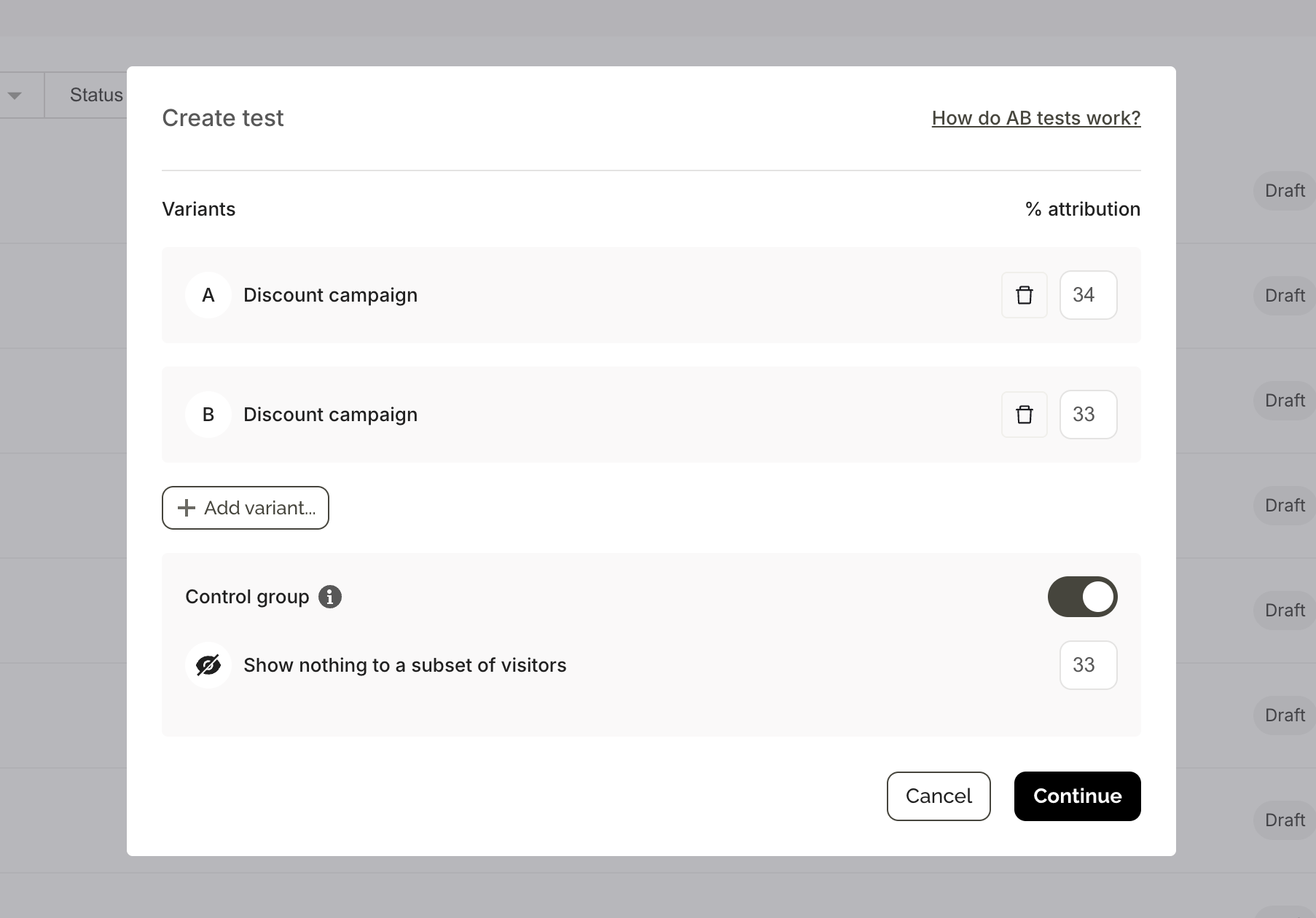

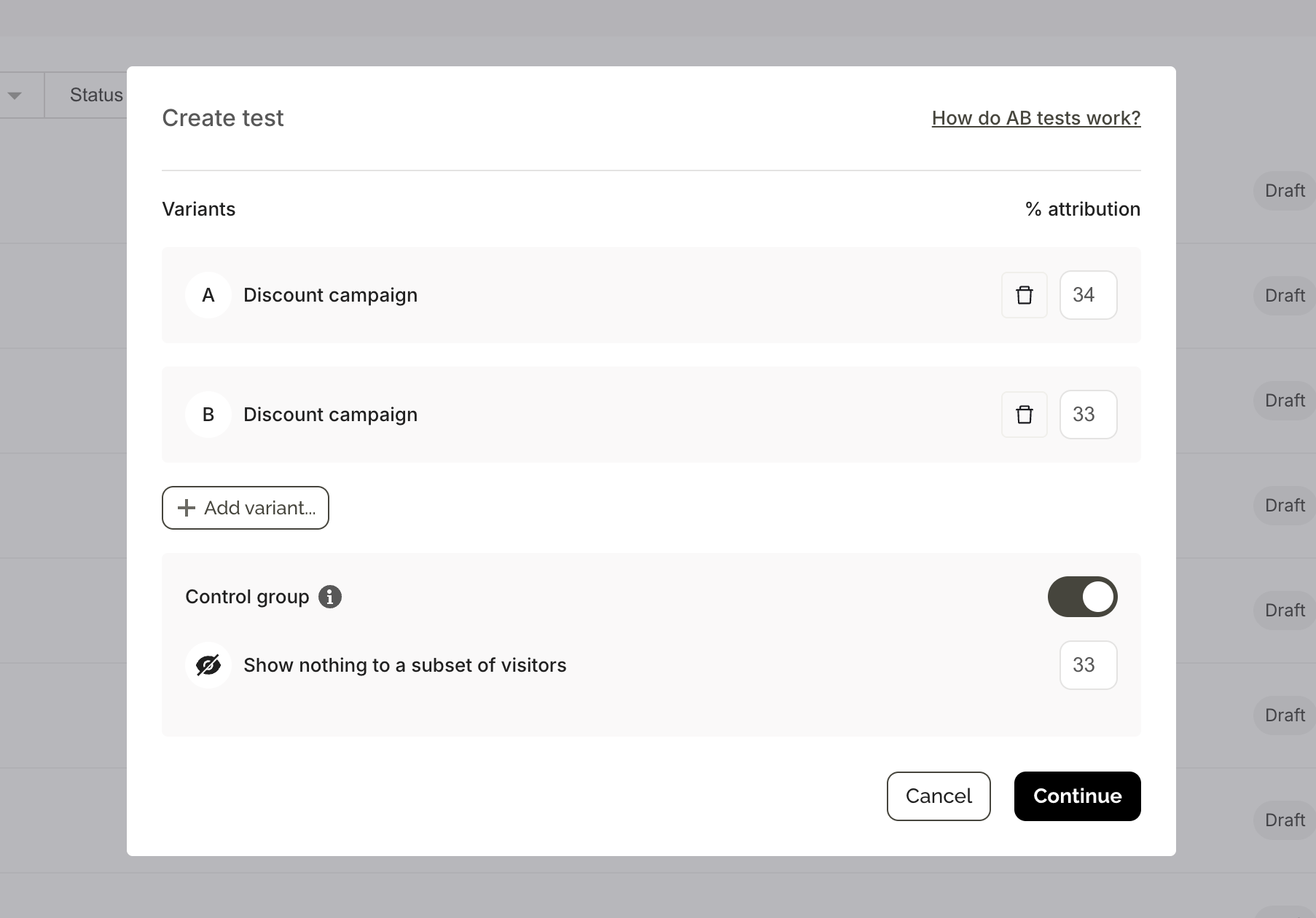

A/B testing popups with a control group: the only way to know for sure

There's only one way to know if your popups are actually driving incremental revenue: test them against doing nothing at all.

We do this by A/B testing against a control group, also known as incrementality testing.

It's an effective way of measuring true popup campaign impact. Instead of asking "how much revenue can we attribute to this popup?" we're asking "how much additional revenue does this popup actually generate?"

Let me give you an example.

Brand C, a fashion store on Shopify, was convinced their welcome popup with a discount was doing a great job. The attribution data looked great, indeed: a 9% conversion rate and over $200,000 in attributed revenue over about six months.

But, again, their head of ecommerce wanted evidence that the campaign was actually generating sales, not just taking credit for purchases that customers would have made anyway.

To find that out, we set up an A/B test where 50% of visitors saw that welcome campaign and 50% saw nothing.
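How you split traffic matters: each visitor should stay in the same group across visits, or the control group gets contaminated. Here's a minimal sketch of one common approach, hashing a stable visitor ID. The experiment name, the visitor ID format, and the `assign_group` helper are assumptions for illustration; in practice the ID might come from a first-party cookie, and visitors in the control group simply never see the popup.

```python
import hashlib


def assign_group(visitor_id: str, experiment: str = "welcome-popup-holdout",
                 treatment_share: float = 0.5) -> str:
    """Deterministically assign a visitor to 'popup' or 'control'.

    Hashing experiment + visitor ID means the same visitor always gets the
    same group, and a new experiment name reshuffles everyone.
    """
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 2**32  # first 32 bits mapped to [0, 1)
    return "popup" if bucket < treatment_share else "control"


# Usage: roughly half of these hypothetical visitor IDs should land in each group
groups = [assign_group(f"visitor-{i}") for i in range(10_000)]
print(groups.count("popup"), groups.count("control"))
```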

The results were pretty interesting.

The group that saw the campaign generated 17% more revenue than the control group. That’s still a significant lift, but the incremental revenue behind it was nowhere near the $200,000 the popup tool had attributed. Now they had a reliable measure of how much the campaign was actually adding.
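The math behind a result like this is straightforward. Here's a minimal sketch with hypothetical numbers (not Brand C's actual data): compare revenue per visitor in each group, then multiply the difference by the number of visitors who saw the popup. Normalizing per visitor keeps the comparison fair even if the two groups end up slightly different in size. `ArmResult` and `incremental_impact` are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class ArmResult:
    visitors: int
    revenue: float


def incremental_impact(treatment: ArmResult, control: ArmResult):
    """Return (relative lift in revenue per visitor, incremental revenue in the popup group)."""
    rpv_t = treatment.revenue / treatment.visitors  # revenue per visitor, popup group
    rpv_c = control.revenue / control.visitors      # revenue per visitor, control group
    lift = (rpv_t - rpv_c) / rpv_c
    incremental_revenue = (rpv_t - rpv_c) * treatment.visitors
    return lift, incremental_revenue


# Hypothetical 50/50 test results
popup_arm = ArmResult(visitors=50_000, revenue=117_000.0)
control_arm = ArmResult(visitors=50_000, revenue=100_000.0)

lift, incremental = incremental_impact(popup_arm, control_arm)
print(f"Lift: {lift:.1%}, incremental revenue: ${incremental:,.0f}")
```

Only the incremental figure is revenue the popup actually added; everything else in the popup group would most likely have come in anyway.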

Real revenue attribution problems we’ve seen

The attribution problem is not getting better.

With iOS privacy updates limiting tracking and Google still not entirely clear on what will happen to third-party cookies, marketing tools are becoming more aggressive about claiming credit for conversions they may not have influenced.

Here are a couple more cases we’ve analyzed.

Case I

One Shopify Plus brand we work with discovered they were overestimating the financial impact of an important lead generation popup. It was set to a 30-day attribution window and appeared to drive strong revenue.

But many of those sales came from repeat buyers who would have purchased anyway.

We tested this by running the popup against a control group. Once the test reached statistical significance, the story was different.

The popup wasn’t lifting results: performance was almost flat.

But ultimately, it was a good thing:

When we re-tested with different display timing and visitor targeting (waiting about 40 seconds before showing the campaign, a delay that boosted engagement in an earlier study of ours), the same lead generation popup started delivering a noticeable incremental revenue lift.

Case II

Another ecommerce brand from the fashion industry found that their popups' reported performance varied sharply between summer and winter. That wasn't because the campaigns performed differently, but because their email marketing was more aggressive during the holiday season.

The popups were getting credit for a portion of email-generated sales simply because shoppers happened to have seen a popup at some point during their longer purchase journey.

These aren't edge cases. They're the predictable result of an attribution system designed to make ecommerce marketing tools look good rather than provide accurate, insightful business intelligence.

Building an attribution framework that works

Here are my tips for implementing an attribution system that helps you make better marketing decisions, based on my experience working with Shopify businesses on their popup strategies.

Start with incremental testing (A/B testing with control groups)

Before optimizing any popup campaign, run it against a control group to establish baseline incremental impact. This becomes your starting point for measuring performance. However, be mindful that your test must get enough visitors to reach statistically significant results within a reasonable period of time.

Use an A/B test calculator for statistical significance to estimate how long your test against a control group needs to run before you draw conclusions.
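If you want to see what a calculator like that does under the hood, here's a sketch using the standard normal-approximation sample-size formula for comparing two conversion rates. The baseline conversion rate, the minimum relative lift you want to detect, and the daily traffic figure are assumptions you'd replace with your own; 5% significance and 80% power are common defaults, not requirements. The function names are hypothetical.

```python
import math
from statistics import NormalDist


def visitors_per_arm(baseline_cr: float, relative_mde: float,
                     alpha: float = 0.05, power: float = 0.8) -> int:
    """Visitors needed in EACH group to detect a relative lift in conversion rate."""
    p1 = baseline_cr
    p2 = baseline_cr * (1 + relative_mde)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


def days_to_run(baseline_cr: float, relative_mde: float, daily_visitors: int,
                treatment_share: float = 0.5, alpha: float = 0.05,
                power: float = 0.8) -> int:
    """Days until the smaller group has collected enough visitors."""
    n = visitors_per_arm(baseline_cr, relative_mde, alpha, power)
    smaller_arm_daily = daily_visitors * min(treatment_share, 1 - treatment_share)
    return math.ceil(n / smaller_arm_daily)


# Hypothetical store: 2% conversion, aiming to detect a 10% relative lift,
# 5,000 visitors per day split 50/50
print(visitors_per_arm(0.02, 0.10), "visitors per group")
print(days_to_run(0.02, 0.10, 5_000), "days")
```

This sizes the test for conversion rate. Revenue per visitor is usually noisier, so a test judged on revenue typically needs more traffic than this estimate suggests, and smaller lifts need far more traffic, since the required sample grows roughly with the inverse square of the lift.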

Set realistic attribution windows

For most popup campaigns on ecommerce stores, we recommend 3-5 days. For high-consideration purchases like furniture, bikes, or luxury goods, you might extend to 7 days in some cases. Shorter windows give more accurate data about immediate popup impact.

Track both attributed and incremental metrics

Keep your traditional attribution data for trend analysis, but always include the incremental lift data from A/B and control group tests. This gives you both the full customer journey view and the true campaign impact.

Segment your campaigns and data by visitor group

Your new visitors might convert from a welcome popup, while returning customers might use the popup as confirmation of a decision they'd already made. Consider making dedicated campaigns for different visitor groups to understand the real impact.
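Once your test records which segment each visitor belongs to, you can compute lift per segment instead of one blended number. Here's a minimal sketch; the segment labels, the record format, and the toy revenue figures are all hypothetical, and in a real test each segment needs enough traffic to reach significance on its own.

```python
from collections import defaultdict


def lift_by_segment(records):
    """records: iterable of (segment, group, revenue) with group 'popup' or 'control'."""
    # counts[segment][group] = [visitors, revenue]
    counts = defaultdict(lambda: {"popup": [0, 0.0], "control": [0, 0.0]})
    for segment, group, revenue in records:
        counts[segment][group][0] += 1
        counts[segment][group][1] += revenue
    lifts = {}
    for segment, arms in counts.items():
        (n_t, rev_t), (n_c, rev_c) = arms["popup"], arms["control"]
        if n_t == 0 or n_c == 0 or rev_c == 0:
            continue  # not enough data to compute a relative lift
        rpv_t, rpv_c = rev_t / n_t, rev_c / n_c
        lifts[segment] = (rpv_t - rpv_c) / rpv_c
    return lifts


# Toy per-visitor records (hypothetical)
records = [
    ("new", "popup", 0.0), ("new", "popup", 0.0), ("new", "popup", 50.0), ("new", "popup", 70.0),
    ("new", "control", 0.0), ("new", "control", 0.0), ("new", "control", 0.0), ("new", "control", 100.0),
    ("returning", "popup", 0.0), ("returning", "popup", 80.0),
    ("returning", "popup", 0.0), ("returning", "popup", 80.0),
    ("returning", "control", 0.0), ("returning", "control", 80.0),
    ("returning", "control", 80.0), ("returning", "control", 0.0),
]
print(lift_by_segment(records))
```

In this toy data the popup lifts revenue per visitor among new visitors and does nothing for returning ones, the kind of split a single blended number would hide.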

Coordinate with other marketing channels

Work with your email, paid ads, and other teams to avoid revenue attribution overlap. If someone clicks your popup and then converts through an email, decide upfront how to allocate credit before it becomes an issue.
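One way to decide upfront is to agree on a single rule for splitting each order's revenue so the shares always add up to the order value. The sketch below uses a position-based split (40% to the first touch, 40% to the last, the rest spread across the middle) purely as an example; the weights, channel names, and `position_based_credit` helper are assumptions, and your teams might just as reasonably agree on a different model.

```python
def position_based_credit(touchpoints, order_value, first_share=0.4, last_share=0.4):
    """Split one order's revenue across touchpoints so the credits sum to order_value.

    With one touchpoint it gets everything; with two, they split 50/50;
    otherwise first/last get fixed shares and the middle splits the remainder.
    """
    n = len(touchpoints)
    if n == 0:
        return {}
    if n == 1:
        weights = [1.0]
    elif n == 2:
        weights = [0.5, 0.5]
    else:
        middle_share = 1.0 - first_share - last_share
        weights = [first_share] + [middle_share / (n - 2)] * (n - 2) + [last_share]
    credit = {}
    for channel, weight in zip(touchpoints, weights):
        # A channel that appears more than once accumulates its shares
        credit[channel] = credit.get(channel, 0.0) + weight * order_value
    return credit


# Sarah's journey with a hypothetical $100 order
credit = position_based_credit(
    ["facebook_ads", "popup", "email", "google_ads", "popup"], 100.0
)
print({channel: round(amount, 2) for channel, amount in credit.items()})
```

However the weights are set, the credits add up to the value of one order, not four.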

Create reporting dashboards that show reality

Instead of reporting "attributed revenue," create dashboards like "incremental revenue from A/B testing" and "estimated total impact range." This way, you’ll give your leaders honest data to make good decisions about popup tool investment.
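For the "estimated total impact range," one option is a bootstrap confidence interval on the difference in revenue per visitor between the popup group and the control group. This is a sketch with made-up data and a hypothetical helper name; scaling the interval by next month's expected visitors also assumes future traffic behaves like the test period.

```python
import random


def bootstrap_incremental_range(treatment_rev, control_rev, n_boot=2000, ci=0.95, seed=42):
    """Percentile bootstrap interval for revenue per visitor (popup minus control)."""
    rng = random.Random(seed)
    diffs = []
    for _ in range(n_boot):
        # Resample each group's per-visitor revenue with replacement
        t = rng.choices(treatment_rev, k=len(treatment_rev))
        c = rng.choices(control_rev, k=len(control_rev))
        diffs.append(sum(t) / len(t) - sum(c) / len(c))
    diffs.sort()
    lo_idx = round((1 - ci) / 2 * (n_boot - 1))
    hi_idx = round((1 + ci) / 2 * (n_boot - 1))
    return diffs[lo_idx], diffs[hi_idx]


# Hypothetical per-visitor revenue from a test (most visitors spend nothing)
treatment = [0.0] * 900 + [100.0] * 100  # popup group: $10.00 per visitor
control = [0.0] * 950 + [100.0] * 50     # control group: $5.00 per visitor

lo, hi = bootstrap_incremental_range(treatment, control)
exposed_visitors = 50_000  # hypothetical visitors expected to see the popup next month
print(f"Incremental revenue per visitor: ${lo:.2f} to ${hi:.2f}")
print(f"Estimated incremental revenue: ${lo * exposed_visitors:,.0f} to ${hi * exposed_visitors:,.0f}")
```

Reporting the range rather than a single figure keeps leadership aware of how much uncertainty the test still carries.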

The bottom line

The overreporting issue isn't unique to popups and other onsite campaigns. It's happening across most digital marketing channels as the industry faces a measurement crisis.

But honest measurement makes you more competitive.

When you know which campaigns drive incremental impact, you can:

Allocate budget to effective tactics

Optimize campaigns based on real performance data, not vanity metrics

Present ROI numbers that leadership can actually trust

Build popup strategies that complement other channels rather than compete with them

Make better strategic decisions as privacy changes disrupt traditional attribution

The goal is to make your popups actually beneficial for your ecommerce growth.

And that starts with measuring what matters: the additional revenue your popups generate, not just the revenue they happen to touch along the way.
